
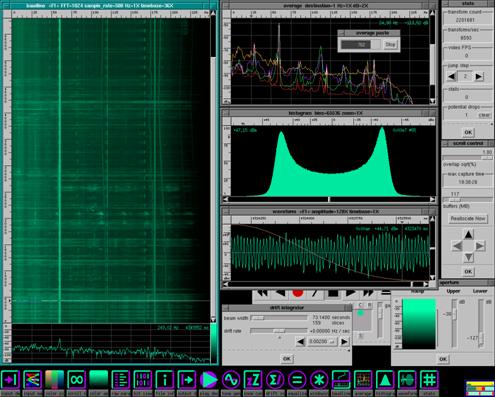

StarFish© Very High Speed Processing

Embedded systems often have to process real-time data coming from the environment. The amount of data can be massive, either by its nature or because a large number of channels are sampled. Moreover, extracting meaningful information (e.g. for object recognition) requires very complex, processing-intensive algorithms, which often necessitate parallel processing hardware. This is the domain of embedded supercomputing. It is often further constrained by power and size restrictions, because the embedded computer must operate in a difficult environment. OpenComRTOS was designed with such boundary conditions in mind.